Ijraset Journal For Research in Applied Science and Engineering Technology

Enhanced Surveillance: Developing a Computer Vision-based CCTV Footage Analysis Tool for Improved Vehicle Number Plate Recognition

Authors: Ravi Khanna, Shreyash Kumar Sahoo, Mr. Sunil Maggu, Ms. Seema Kalonia, Mr. Ajay Kumar Kaushik

DOI Link: https://doi.org/10.22214/ijraset.2024.60807

Abstract

Smart parking systems serve as integral components in augmenting the efficiency and sustainability of modern urban environments. Nonetheless, prevailing systems predominantly rely on sensors to monitor parking space occupancy, entailing substantial installation and maintenance costs while offering limited functionality in tracking vehicle movement within parking lots. To address these challenges, our solution introduces a multistage learning-based approach that capitalizes on the existing surveillance infrastructure within parking facilities and a curated dataset of Saudi license plates. Our approach combines YOLOv5 for license plate detection, YOLOv8 for character detection, and a novel convolutional neural network architecture tailored for enhanced character recognition. We show that our approach outperforms single-stage methods, achieving an overall accuracy of 96.1% compared with the 83.9% accuracy of single-stage approaches. Furthermore, our solution integrates into a web-based dashboard, facilitating real-time visualization and statistical analysis of parking space occupancy and vehicle movement with acceptable time efficiency. This work underscores the potential of leveraging existing technological resources to bolster the operational efficiency and environmental sustainability of smart cities. By harnessing machine learning and computer vision, our work exemplifies a shift towards smarter and more adaptive urban infrastructure.

I. INTRODUCTION

Smart cities epitomize urban landscapes that harness diverse technologies and data reservoirs to elevate citizen well-being and streamline service efficiency. Within this context, smart transportation emerges as a pivotal domain, endeavoring to optimize mobility and safety across urban realms. Among the bedrock elements of smart transportation lie Smart Parking Systems (SPSs), pivotal in mitigating traffic congestion, reducing fuel consumption, curbing air pollution, and minimizing parking search times by furnishing real-time insights into parking availability and locations.

Technological strides, driven by advancements in communication and information realms, have democratized the development of cost-effective SPSs. These systems confer benefits upon car park operators, motorists, and the environment alike. Operators leverage SPS data to forecast parking trends and formulate pricing strategies, while motorists revel in the convenience of locating vacant parking slots and retrieving their vehicles swiftly, thereby truncating travel and search durations. Moreover, SPSs, by curtailing vehicular emissions, contribute tangibly to environmental stewardship.

A gamut of approaches to SPS development permeates the literature. Predominantly, wireless sensor networks, the Internet of Things (IoT), and computer vision-based systems dominate discourse. Yet, designing and deploying SPSs poses myriad challenges. Chief among these hurdles is the imperative to monitor parking space occupancy reliably and economically. Conventional systems, reliant on sensors like magnetic, ultrasonic, or infrared sensors, offer rudimentary occupancy detection but falter in discerning vehicular identities or tracking movement within parking lots. Moreover, their susceptibility to hardware malfunctions, interference, or vandalism impugns their reliability.

To circumvent these limitations, recent scholarship has championed the utilization of computer vision techniques to monitor parking space occupancy via extant surveillance cameras within parking facilities. These methodologies adeptly detect and discern vehicle license plates, thereby updating occupancy status dynamically. In contrast to sensor-centric systems, they yield cost efficiencies, exhibit greater adaptability in tracking vehicular movements, and confer ancillary functionalities such as vehicle identification, access management, security surveillance, and statistical analysis.

This research contributes significantly to the advancement of Smart Parking Systems (SPSs) through the following key innovations:

- Introduction of a Dataset: A novel dataset of license plates, meticulously curated and annotated in-house, enriches the research landscape. This dataset spans diverse scenarios of license plate detection, character detection, and character recognition, serving as a valuable resource for future studies.

- Development of a Multistage Learning-Based Approach: Our research pioneers a multistage approach tailored for SPSs, harnessing existing surveillance infrastructure within parking facilities alongside the indigenous license plate dataset. This approach encompasses three distinct stages: license plate detection, character detection, and character recognition, ensuring comprehensive coverage of the parking space monitoring process.

- Training and Fine-Tuning of YOLO Series: Leveraging state-of-the-art YOLO series models—YOLOv5, YOLOv7, and YOLOv8—we achieve remarkable milestones in license plate detection and character detection. Notably, YOLOv5x attains a mean average precision of 99.4% for license plate detection, while YOLOv8x achieves 98.1% for character detection, underscoring the robustness and efficiency of our methodology.

- Proposal of a Novel CNN Architecture: A bespoke Convolutional Neural Network (CNN) architecture designed specifically for license plate character recognition surpasses existing approaches, boasting an impressive accuracy rate of 97%.

- Demonstration of Superiority over Single-Stage Approach: Empirical evidence substantiates the superiority of our multistage approach over the single-stage counterpart (YOLOv8x), showcasing an overall accuracy of 96.1% as opposed to 83.9%, while maintaining reasonable time efficiency.

- Development of a Web-Based Dashboard: A user-friendly web-based dashboard facilitates real-time monitoring and statistical analysis of car park occupancy, serving as a tangible manifestation of our system's efficacy in real-world settings.

These contributions collectively propel the field of SPSs, offering novel methodologies, datasets, and tools for enhanced parking space management and urban mobility optimization.

II. METHODOLOGY

We herein detail our methodology. First, we discuss the proposed system architecture. Then, we describe the stages employed in the proposed deep learning approach. We then present the application of our proposed system to a use case in Saudi Arabia. Finally, we describe the experiment preparation and setup, including the dataset, the hardware, and the software. We also discuss the evaluation metrics that we used to measure the performance of our system.

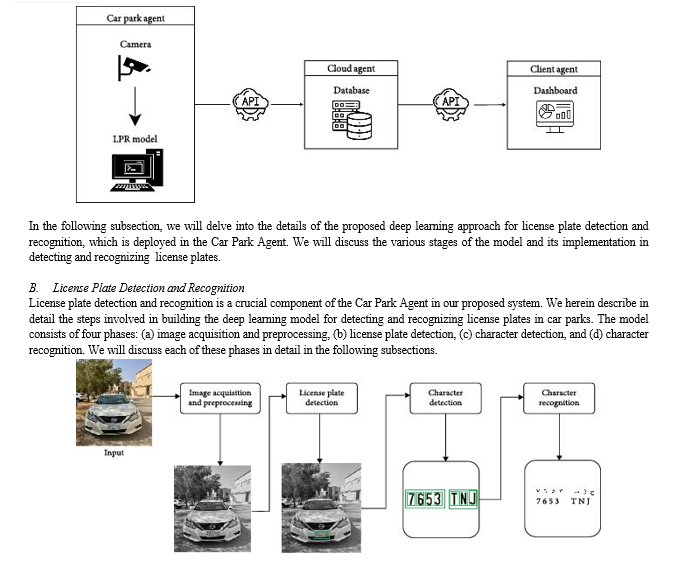

A. System Architecture

The proposed system architecture comprises three main components:

- The Car Park Agent consists of car park cameras connected to a local PC equipped with the proposed deep learning model to analyze the cameras’ footage by detecting and recognizing car license plates. One camera is positioned at the car park entrance, while additional cameras are located at the entrance of each floor. The deep learning model leverages the state-of-the-art YOLO series and a newly proposed CNN architecture for improved license plate character recognition, achieving high accuracy and acceptable time efficiency.

- The Cloud Agent is a cloud-based database that receives insights from the Car Park Agent via APIs. To maintain privacy and confidentiality, camera footage is not transmitted to the Cloud Agent. Instead, the Cloud Agent receives insights derived from the Car Park Agent’s analysis of the footage, enabling real-time monitoring and analysis of car park occupancy and vehicle movement.

- The Client Agent is a web-based dashboard that retrieves statistical results from the Cloud Agent via APIs. These results are displayed to car park operators, enabling efficient monitoring and analysis of car park occupancy and vehicle movement in real-time.
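The division of responsibilities among the three agents can be illustrated with a minimal sketch. The example below assumes a hypothetical `PlateInsight` record and hypothetical field names (`plate_text`, `camera_id`, `floor`, `confidence`, `timestamp`); none of these are specified in the paper. It shows only the idea that the Car Park Agent serializes derived insights, never image data, before they would be sent to the Cloud Agent's API.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class PlateInsight:
    """One recognition event produced locally by the Car Park Agent.

    All field names are illustrative assumptions, not the paper's schema.
    """
    plate_text: str
    camera_id: str
    floor: int          # 0 = car park entrance (assumed convention)
    confidence: float
    timestamp: str      # ISO 8601 string in UTC


def make_insight(plate_text: str, camera_id: str, floor: int,
                 confidence: float) -> PlateInsight:
    """Stamp a recognition result with the current UTC time."""
    now = datetime.now(timezone.utc).isoformat()
    return PlateInsight(plate_text, camera_id, floor, confidence, now)


def build_payload(insight: PlateInsight) -> str:
    """Serialize an insight to JSON; no frames or pixels are included."""
    return json.dumps(asdict(insight), sort_keys=True)


if __name__ == "__main__":
    event = make_insight("ABC1234", "entrance-cam", 0, 0.97)
    print(build_payload(event))
```

Keeping the payload to a handful of text fields is one way to honor the privacy constraint described above: whatever transport the Cloud Agent API uses, footage never leaves the local PC.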

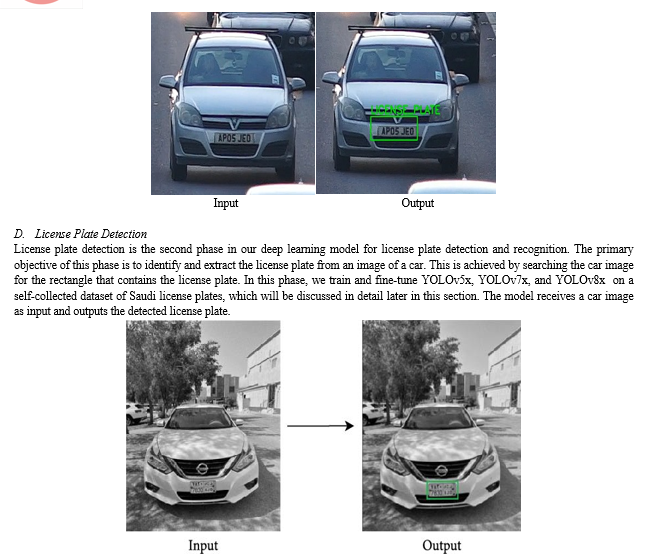

C. Image Acquisition and Preprocessing

Image acquisition and preprocessing is the first phase in our deep learning model for license plate detection and recognition. Image preprocessing plays a crucial role in the object detection model pipeline by highlighting the desired region in the image. During this phase, various filters are applied to the image to remove impurities and enhance its quality. In our work, images are resized to 640 × 640 pixels and converted to grayscale using resize and cvtColor methods from the OpenCV-Python library. These specific image dimensions were chosen to match the standard dimensions used by YOLO models trained on the COCO dataset, enabling the use of transfer learning when training our proposed models in subsequent phases. Images are also denoised using the fastNlMeansDenoising method from the OpenCV-Python library and transformed by increasing their brightness using the ColorJitter (brightness = 0.2) method provided by PyTorch. Moreover, we used augmentation techniques on the training set images when training our YOLO and CNN models on our dataset. These techniques helped us to simulate real-world scenarios in parking systems and improve the generalization of our models. The validation and test sets were not augmented to ensure an accurate evaluation of the proposed model performance. We will discuss the augmentation techniques when we present the training process of the proposed models.

YOLO object detection algorithms are based on the single-shot detection framework, which performs object detection by dividing the input image into a grid and predicting bounding boxes and confidence scores for each grid cell. YOLOv5 uses a cross-stage partial network (CSPNet) with Darknet as its backbone, which is responsible for extracting features from the input image. The neck of YOLOv5 is a path aggregation network (PANet), which fuses features from different levels of the backbone. YOLOv5 also uses adaptive feature pooling to improve the accuracy of object localization. YOLOv5 comes in five model scales that trade off size against performance: YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. YOLOv7 introduces the extended efficient layer aggregation network (E-ELAN) as an improved version of the ELAN computational block, which enables efficient learning without destroying the original gradient path. YOLOv7 uses reparameterized convolutions (RepConv) as the basic building block and applies coarse label assignment for the auxiliary head and fine label assignment for the lead head. YOLOv7 has two model sizes: YOLOv7 and YOLOv7x. YOLOv8 is an anchor-free detector, which reduces the number of box predictions and speeds up non-maximum suppression (NMS), the process of removing overlapping bounding boxes. YOLOv8 employs mosaic augmentation to enhance the training process for various real-world applications. YOLOv8 is considered the state of the art among YOLO object detectors. YOLOv8 has five model scales: YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x.
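To make the non-maximum suppression step concrete, the following is a minimal pure-Python sketch of greedy, class-agnostic NMS based on intersection over union (IoU). It is not the implementation used inside any YOLO release; the box format `(x1, y1, x2, y2)` and the default threshold of 0.5 are assumptions chosen for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of boxes kept, visiting boxes from highest score down."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))
```

In this example the second box overlaps the first with an IoU of about 0.68 and is suppressed, while the third box is disjoint and survives. Fewer candidate boxes per image mean fewer such pairwise comparisons, which is why an anchor-free design can shorten this step.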

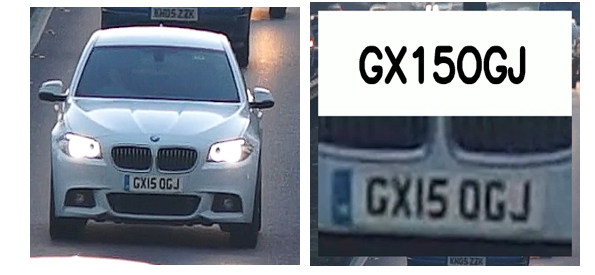

E. Text Extraction from License Plate Images

To conduct text extraction from license plate images for a research paper utilizing EasyOCR, commence by meticulously documenting the setup procedure, including the installation of EasyOCR within the Python environment, and any dependencies required. Clarify the rationale behind selecting EasyOCR, emphasizing its efficiency and ease of use for optical character recognition tasks. Substantiate the methodology by detailing the steps involved, from importing essential libraries to loading the license plate images. Elucidate the importance of image preprocessing techniques to enhance the accuracy of text extraction, such as noise reduction and contrast enhancement. Explicitly outline any parameters configured during text extraction, such as language specifications or character set constraints, to ensure reproducibility. Additionally, discuss any limitations encountered during the experimentation phase, along with potential avenues for future research to address these constraints. Conclude by summarizing the efficacy of EasyOCR in extracting text from license plate images and its implications for various applications, including vehicle identification, law enforcement, and traffic management systems.
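A minimal sketch of such a text extraction step is shown below. It uses EasyOCR's `Reader` and `readtext` calls with the `allowlist` parameter as the character set constraint. The character set `PLATE_CHARS`, the confidence cut-off `min_conf`, the single-row left-to-right ordering, and the helper names are all assumptions made for illustration; the paper does not state the parameters that were used.

```python
import re
from typing import Iterable, Tuple

# EasyOCR's readtext returns a list of (bounding_box, text, confidence) tuples,
# where bounding_box is four [x, y] points starting at the top-left corner.
OcrResult = Tuple[list, str, float]

PLATE_CHARS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789"  # assumed character set


def clean_plate_text(results: Iterable[OcrResult], min_conf: float = 0.4) -> str:
    """Join confident fragments left to right and drop non-plate characters."""
    confident = [r for r in results if r[2] >= min_conf]
    # Order fragments by the x coordinate of each box's top-left corner
    # (assumes a single-row plate).
    confident.sort(key=lambda r: r[0][0][0])
    joined = "".join(text for _, text, _ in confident).upper()
    return re.sub(f"[^{PLATE_CHARS}]", "", joined)


def read_plate(image_path: str) -> str:
    """Run EasyOCR on a cropped plate image (requires `pip install easyocr`)."""
    import easyocr  # imported lazily so clean_plate_text works without it

    reader = easyocr.Reader(["en"], gpu=False)
    results = reader.readtext(image_path, allowlist=PLATE_CHARS)
    return clean_plate_text(results)


if __name__ == "__main__":
    # Hand-written results in EasyOCR's output format, for demonstration only.
    fake = [
        ([[120, 5], [200, 5], [200, 40], [120, 40]], "1234", 0.95),
        ([[10, 5], [100, 5], [100, 40], [10, 40]], "ab-c", 0.88),
    ]
    print(clean_plate_text(fake))
```

Separating the post-processing from the OCR call keeps the configured parameters in one place, which helps with the reproducibility concern raised above.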

III. RELATED WORK AND RESULTS

| Year | Authors | Study | Proposed method | Outcome |
|---|---|---|---|---|
| 2020 | M. G. D. Ogás, R. Fabregat, and S. Aciar | Survey of smart parking systems | The proposed method categorizes algorithms in Smart Parking Systems (SPS) into static, dynamic, and real-time types for efficient route planning and parking space utilization. | SPS show promise, with 50% simulated, 48% proposed, and 2% implemented; dynamic algorithms prevail. |
| 2020 | Joshua, J. Hendryli, and D. E. Herwindiati | Automatic license plate recognition for parking system using convolutional neural networks | Develop a deep learning-based algorithm to address image quality issues and enhance license plate detection using RCNN and CNN models. | The study implemented an Automatic Number Plate Recognition (ANPR) system using YOLO detector for license plate location. |
| 2020 | N. Darapaneni, K. Mogeraya, S. Mandal et al. | Computer vision based license plate detection for automated vehicle parking management system | Utilized a self-collected dataset of 2,528 Saudi car license plate images, divided into training, validation, and testing sets. Trained and fine-tuned YOLOv5x, YOLOv7x, and YOLOv8x frameworks with specified hyperparameters. | YOLOv5x exhibited superior performance in license plate detection with mAP@0.5 of 0.994 and mAP@0.95 of 0.892, leading to its selection for the final approach. |
| 2022 | R. Nithya, V. Priya, C. S. Kumar, J. Dheeba, and K. A. Chandraprabha | Smart parking system: an IoT based computer vision approach for free parking spot detection using faster R-CNN with YOLOv3 method | Utilizes existing surveillance cameras and a dataset for Saudi license plate detection and recognition using YOLO and a novel CNN architecture, integrated into a web-based dashboard. | Offers a cost-effective solution for Smart Parking Systems, achieving high accuracy and real-time visualization for enhanced smart city efficiency. |
| 2022 | B. Budihala, T. Ivașcu, and S. Ștefaniga | Motorage—computer vision-based self-sufficient smart parking system | Leveraging existing surveillance cameras and a self-collected dataset, our approach combines YOLOv5 and YOLOv8 for accurate license plate detection and character recognition. | Outperforming single-stage methods with 96.1% accuracy, our integrated system enhances smart city efficiency and sustainability. |

IV. ALGORITHM

The YOLO (You Only Look Once) algorithm series revolutionizes object detection in images and videos. YOLOv8, an evolution from its predecessors, enhances accuracy, speed, and adaptability. Retaining the core principles of YOLO, YOLOv8 introduces refined architectural improvements and optimization techniques. Its network architecture incorporates deeper layers and more intricate structures to improve feature representation and extraction, enhancing detection accuracy.

YOLOv8 leverages transfer learning and fine-tuning strategies, utilizing pre-trained models to expedite training and adapt efficiently to various object recognition tasks and datasets. This approach ensures robust performance without the need for extensive training from scratch.
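As an illustration of this transfer-learning workflow, the sketch below starts from pre-trained weights and continues training on a custom dataset using the Ultralytics Python package (`YOLO(...)` and `model.train(...)`). The dataset file `plates.yaml` is hypothetical, and the epoch count and batch size are placeholders rather than the hyperparameters used in this work; only the 640-pixel image size follows the preprocessing described earlier.

```python
from typing import Any, Dict


def build_train_config(data_yaml: str, epochs: int = 50,
                       imgsz: int = 640, batch: int = 16) -> Dict[str, Any]:
    """Collect keyword arguments for Ultralytics' `model.train(...)`.

    The default values are placeholders, not the paper's hyperparameters.
    """
    if epochs <= 0 or batch <= 0:
        raise ValueError("epochs and batch must be positive")
    return {"data": data_yaml, "epochs": epochs, "imgsz": imgsz, "batch": batch}


def fine_tune(weights: str, config: Dict[str, Any]):
    """Load pre-trained weights and continue training on a new dataset."""
    from ultralytics import YOLO  # lazy import: requires `pip install ultralytics`

    model = YOLO(weights)         # e.g. "yolov8x.pt", pre-trained on COCO
    return model.train(**config)


if __name__ == "__main__":
    cfg = build_train_config("plates.yaml")  # hypothetical dataset description
    print(cfg)
    # fine_tune("yolov8x.pt", cfg)  # run only where the dataset and a GPU exist
```

Because the pre-trained weights already encode generic visual features, the training run only has to adapt them to license plates or characters, which is the saving in training effort described above.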

Furthermore, YOLOv8 prioritizes computational efficiency. Through optimization of network architecture and algorithmic intricacies, it achieves accelerated processing speeds while maintaining high accuracy. This efficiency enables real-time deployment across applications, from surveillance systems to autonomous vehicles.

In summary, YOLOv8 embodies advancements in deep learning, computer vision, and neural network architecture. By integrating refined designs, transfer learning, and computational optimizations, YOLOv8 sets new standards in object detection, empowering applications with unparalleled accuracy and efficiency.

EasyOCR is a Python-based optical character recognition (OCR) library known for its simplicity and effectiveness in extracting text from images. Leveraging deep learning algorithms, EasyOCR integrates readily into Python applications, enabling users to extract text from various sources, including images, scanned documents, and screenshots.

With support for multiple languages and robust performance across diverse fonts and styles, EasyOCR has emerged as a versatile tool for tasks ranging from digitizing documents to automating data entry processes. Its intuitive API and minimal setup requirements make it accessible to both novice and experienced developers, positioning EasyOCR as a go-to solution for text extraction needs in research, industry, and beyond.

References

[1] R. Nithya, V. Priya, C. S. Kumar, J. Dheeba, and K. A. Chandraprabha, “Smart parking system: an IoT based computer vision approach for free parking spot detection using faster R-CNN with YOLOv3 method,” Wireless Personal Communications, vol. 125, no. 4, pp. 3205–3225, 2022.

[2] M. G. D. Ogás, R. Fabregat, and S. Aciar, “Survey of smart parking systems,” Applied Sciences, vol. 10, no. 3872, 2020.

[3] C. J. Rodier, S. A. Shaheen, and C. Kemmerer, “Smart parking management field test: a Bay Area rapid transit (BART) district parking demonstration,” University of California, Berkeley, Final report, 2008.

[4] M. Y. I. Idris, Y. Y. Leng, E. M. Tamil, N. M. Noor, and Z. Razak, “Car park system: a review of smart parking system and its technology,” Information Technology Journal, vol. 8, no. 2, pp. 101–113, 2009.

[5] A. Fahim, M. Hasan, and M. A. Chowdhury, “Smart parking systems: comprehensive review based on various aspects,” Heliyon, vol. 7, no. 5, Article ID e07050, 2021.

[6] C.-F. Chien, H.-T. Chen, and C.-Y. Lin, “A low-cost on-street parking management system based on bluetooth beacons,” Sensors, vol. 20, no. 16, Article ID 4559, 2020.

[7] B. Budihala, T. Ivașcu, and S. Ștefaniga, “Motorage—computer vision-based self-sufficient smart parking system,” in Proceedings of the 2022 24th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), pp. 250–257, IEEE, Linz, Austria, 2022.

[8] M. Dixit, C. Srimathi, R. Doss, S. Loke, and M. Saleemdurai, “Smart parking with computer vision and iot technology,” in Proceedings of the 2020 43rd International Conference on Telecommunications and Signal Processing (TSP), pp. 170–174, IEEE, Italy, 2020.

[9] Joshua, J. Hendryli, and D. E. Herwindiati, “Automatic license plate recognition for parking system using convolutional neural networks,” in Proceedings of the 2020 International Conference on Information Management and Technology (ICIMTech), pp. 71–74, IEEE, Indonesia, 2020.

[10] N. Darapaneni, K. Mogeraya, S. Mandal et al., “Computer vision based license plate detection for automated vehicle parking management system,” in Proceedings of the 2020 11th IEEE Annual Ubiquitous Computing, Electronics Mobile Communication Conference (UEMCON), pp. 0800–0805, IEEE, New York, 2020.

Copyright

Copyright © 2024 Ravi Khanna, Shreyash Kumar Sahoo, Mr. Sunil Maggu, Ms. Seema Kalonia, Mr. Ajay Kumar Kaushik. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60807

Publish Date : 2024-04-22

ISSN : 2321-9653

Publisher Name : IJRASET
